The Privacy Paradox: Can You Train on Enterprise Data Without Leaking Trade Secrets?

The Data That Makes AI Smart Can Also Make You Vulnerable

Enterprise AI has a hunger problem.

The more proprietary data you feed into models—internal documents, source code, customer interactions, financial records—the more useful and differentiated your AI becomes. But that same data is often your most valuable intellectual property.

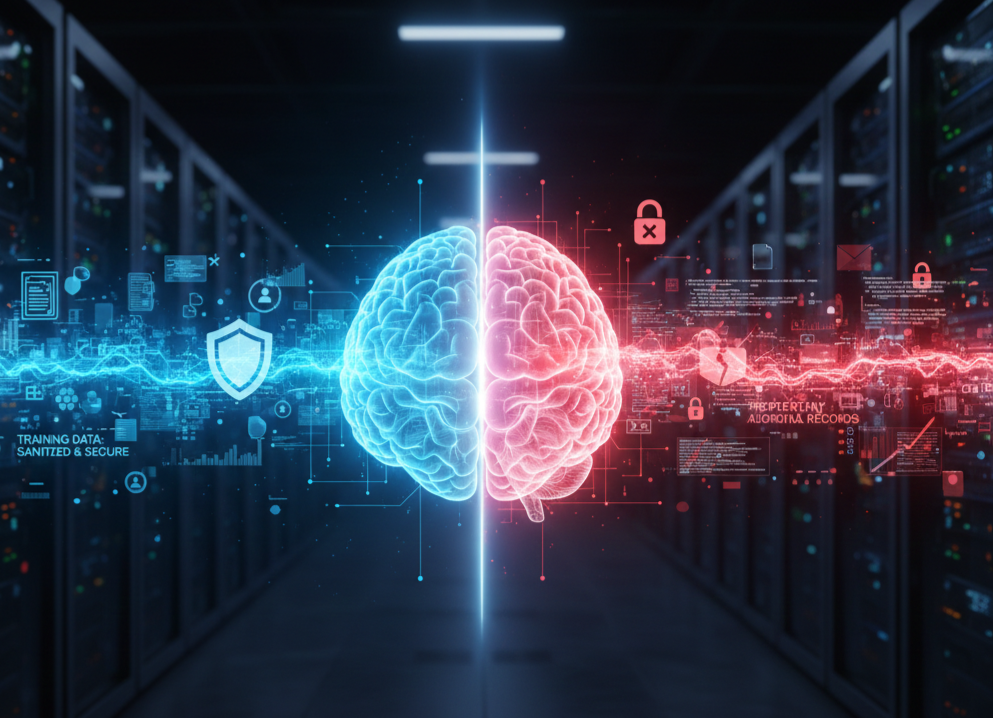

This creates a fundamental tension:

How do you train AI on enterprise data without exposing trade secrets, sensitive information, or regulated data?

This is the Privacy Paradox at the heart of modern AI adoption—and there is no single silver-bullet solution.

Why Enterprise Data Is Both Essential and Dangerous

Why You Need It

Generic models trained on public data:

Lack domain context

Miss internal terminology

Fail on company-specific workflows

Enterprise value comes from:

Proprietary knowledge

Internal processes

Historical decisions

Customer-specific insights

Without enterprise data, AI remains shallow.

Why It’s Dangerous

Enterprise data often contains:

Trade secrets

Source code

Pricing strategies

Customer PII

Legal and contractual information

Once this data is exposed, even unintentionally, the damage is often irreversible.

How Trade Secrets Leak in AI Systems

Most data leaks are not malicious. They are architectural.

1. Training Data Retention and Model Memorization

LLMs can memorize rare or unique data, especially when:

Datasets are small

Information is highly specific

Fine-tuning is aggressive

This can lead to:

Verbatim regurgitation

Partial reconstruction of sensitive content

A model that “remembers” is a model that leaks.
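One rough way to look for verbatim regurgitation is to compare model outputs against the sensitive documents used in fine-tuning. The sketch below is a minimal illustration, not a complete memorization audit: it measures what fraction of an output's word 5-grams also appear in a set of sensitive documents. The function names and the 5-gram window are assumptions chosen for the example.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(output: str, sensitive_docs: list[str], n: int = 5) -> float:
    """Fraction of the output's n-grams that also occur in sensitive_docs.

    0.0 means no shared n-grams; values near 1.0 suggest the output
    largely reproduces sensitive text word for word.
    """
    out_grams = ngrams(output, n)
    if not out_grams:
        return 0.0  # output shorter than n words: nothing to compare
    corpus_grams: set[tuple[str, ...]] = set()
    for doc in sensitive_docs:
        corpus_grams |= ngrams(doc, n)
    return len(out_grams & corpus_grams) / len(out_grams)
```

A check like this only catches exact word-for-word reuse. Paraphrased or partially reconstructed content needs other techniques, so a low score is not evidence that nothing leaked.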

2. Inference-Time Leakage

Even without training, data can leak during usage:

Prompts include sensitive context

Outputs expose internal logic

Context windows mix users or sessions

This is especially risky in:

Shared models

Multi-tenant environments

3. Third-Party Model Providers

When using external LLMs:

Data may be logged

Prompts may be stored

Usage may contribute to retraining

Even when providers promise safeguards, your direct control over the data is reduced.

4. Tool-Connected and Agentic Systems

AI agents with tool access can:

Move data across systems

Combine sensitive sources

Expose data through logs or actions

Privacy failures here are often indirect and delayed.

The Illusion of “Safe by Default” AI

Common but flawed assumptions:

❌ “The model provider guarantees privacy.”

❌ “We’re not training, only prompting.”

❌ “Our data isn’t valuable enough to leak.”

❌ “Access controls alone are sufficient.”

In reality, privacy is an architectural property, not a promise.

Strategies to Train on Enterprise Data—Safely

There is no zero-risk option, but risk can be managed.

1. Prefer Retrieval Over Training (When Possible)

Retrieval-Augmented Generation (RAG) keeps data:

Outside the model

Queried at inference time

Under enterprise control

Advantages:

No permanent memorization in model weights

Easier access revocation

Clear data boundaries

RAG doesn’t eliminate risk—but it significantly reduces it.
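To make the retrieval pattern concrete, here is a minimal sketch of an access-controlled document store. It assumes a toy keyword-overlap ranking in place of a real vector search, and the names `Document`, `DocumentStore`, and `build_prompt` are hypothetical. The point it illustrates is that the permission check runs before any text reaches the prompt, and that revoking access takes effect on the next query.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_groups: set[str] = field(default_factory=set)


class DocumentStore:
    """In-memory store; documents stay outside the model."""

    def __init__(self) -> None:
        self._docs: dict[str, Document] = {}

    def add(self, doc: Document) -> None:
        self._docs[doc.doc_id] = doc

    def revoke(self, doc_id: str, group: str) -> None:
        """Remove a group's access; the next retrieval reflects it."""
        self._docs[doc_id].allowed_groups.discard(group)

    def retrieve(self, query: str, user_groups: set[str], k: int = 3) -> list[Document]:
        terms = set(query.lower().split())
        scored = []
        for doc in self._docs.values():
            # Access check happens before scoring, so unauthorized
            # documents never become candidates for the prompt.
            if not (doc.allowed_groups & user_groups):
                continue
            score = len(terms & set(doc.text.lower().split()))
            if score > 0:
                scored.append((score, doc))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Assemble the context that would be sent to the model."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Because the documents are never written into model weights, calling `revoke` is enough to stop them from appearing in future answers. A fine-tuned model offers no equivalent operation.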

2. Use Private or Isolated Model Environments

For sensitive data:

Avoid shared public models

Use tenant-isolated deployments

Consider on-prem or VPC-hosted models

Isolation limits blast radius.

3. Apply Data Minimization Aggressively

Ask before training:

Does the model need this exact data?

Can it be summarized, anonymized, or abstracted?

Can identifiers be removed?

Models rarely need raw secrets to be effective.
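As an illustration of removing identifiers before data reaches a training set, the sketch below replaces a few identifier types with placeholder tags. The patterns are assumptions for the example: the email and SSN expressions are simplified, and the `CUST-` ID format is hypothetical. A production pipeline would need patterns tuned to its own data, and usually more than regular expressions.

```python
import re

# Illustrative patterns only; real data needs broader, tested coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CUSTOMER_ID": re.compile(r"\bCUST-\d{6}\b"),  # hypothetical internal ID format
}


def minimize(text: str) -> str:
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(minimize("Contact jane.doe@example.com about CUST-004217, SSN 123-45-6789."))
# prints: Contact [EMAIL] about [CUSTOMER_ID], SSN [SSN].
```

The placeholders keep the sentence structure intact, so the model can still learn the shape of the workflow without seeing the underlying values.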

4. Differential Privacy and Noise Injection (With Caution)

Differential Privacy (DP):

Adds calibrated noise during training so that no single record dominates what the model learns

Limits leakage from rare examples

Trade-offs:

Reduced accuracy

Complex tuning

Not a standalone solution

DP is powerful—but not trivial to deploy correctly.
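The core mechanics can be sketched in a few lines. The example below follows the general shape of a DP-SGD style update: clip each example's gradient to a maximum norm, sum the clipped gradients, add Gaussian noise scaled to that norm, then average. It is written in plain Python for readability, and the function names and parameters are assumptions for illustration. It does not compute a privacy budget (epsilon), which requires separate accounting.

```python
import math
import random


def clip(grad: list[float], max_norm: float) -> list[float]:
    """Scale grad down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(
    per_example_grads: list[list[float]],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> list[float]:
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Noise is proportional to max_norm, the most any one example
    # can contribute to the sum after clipping.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]
```

Clipping is what limits the influence of a rare, highly specific record. The noise then masks whatever influence remains. Raising `noise_multiplier` strengthens the protection and lowers accuracy, which is the trade-off listed above.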

5. Strict Prompt and Context Controls

At inference time:

Classify inputs

Redact sensitive fields

Enforce session isolation

Log and audit usage

Many leaks occur during use rather than training, so inference-time controls deserve as much attention as training-time ones.
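A minimal sketch of how classification, session isolation, and audit logging might fit together in a gateway that sits in front of the model. Everything here is hypothetical: the `PromptGateway` class, the keyword-based `classify` function, and the marker list stand in for whatever classifier and policy an organization actually uses.

```python
import hashlib
from collections import defaultdict

# Hypothetical markers; a real classifier would be far more thorough.
RESTRICTED_MARKERS = ("confidential", "trade secret", "internal only")


def classify(prompt: str) -> str:
    """Label a prompt 'restricted' if it contains any marker, else 'general'."""
    lowered = prompt.lower()
    return "restricted" if any(m in lowered for m in RESTRICTED_MARKERS) else "general"


class PromptGateway:
    def __init__(self) -> None:
        # One context list per session ID; sessions never share a list.
        self._contexts: dict[str, list[str]] = defaultdict(list)
        self.audit_log: list[dict[str, str]] = []

    def submit(self, session_id: str, user: str, prompt: str) -> bool:
        """Record the request and return True if it may go to the model."""
        label = classify(prompt)
        allowed = label != "restricted"
        # Log a hash rather than the raw prompt so the audit trail
        # does not become another copy of the sensitive text.
        self.audit_log.append({
            "session": session_id,
            "user": user,
            "label": label,
            "decision": "allow" if allowed else "block",
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        })
        if allowed:
            self._contexts[session_id].append(prompt)
        return allowed

    def context_for(self, session_id: str) -> list[str]:
        """Return a copy of the context for one session only."""
        return list(self._contexts.get(session_id, []))
```

Blocked prompts are still logged, so auditors can see how often users try to send restricted material and adjust training or policy accordingly.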

6. Contractual and Technical Safeguards with Vendors

If using third-party LLMs, require:

Explicit data retention clauses

A training opt-out

Audit rights

Clear deletion guarantees

Legal controls must match technical reality.

The Governance Layer: Where Most Organizations Fall Short

Technical safeguards fail without governance.

Enterprises need:

Clear AI data classification policies

Defined “no-train” data categories

Approval workflows for fine-tuning

Ongoing audits and red teaming

Privacy is not a one-time checkbox—it is a continuous discipline.
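Policies like these are easier to enforce when they are encoded as a check that runs before any fine-tuning job starts. The sketch below assumes a hypothetical set of "no-train" categories and a simple approval field. The category names and the `Dataset` structure are illustrative, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical "no-train" categories defined by the data classification policy.
NO_TRAIN = {"trade_secret", "customer_pii", "legal_privileged"}


@dataclass(frozen=True)
class Dataset:
    name: str
    classification: str
    approved_by: Optional[str] = None  # reviewer who signed off, if any


def fine_tuning_decision(dataset: Dataset) -> tuple[bool, str]:
    """Return (allowed, reason) for using the dataset in fine-tuning."""
    if dataset.classification in NO_TRAIN:
        return False, f"'{dataset.classification}' is a no-train category"
    if dataset.approved_by is None:
        return False, "missing approval for fine-tuning"
    return True, f"approved by {dataset.approved_by}"
```

Note that approval cannot override a no-train category in this sketch. Whether exceptions should exist, and who may grant them, is a governance decision rather than a technical one.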

Can You Ever Eliminate the Risk Completely?

Short answer: No.

Any system that learns from data carries some risk of leakage.

The real question is:

Is the risk understood, measured, and acceptable relative to the value gained?

Mature organizations shift from:

“Is this safe?”

to “Is this safe enough, given our controls and objectives?”

The Strategic Trade-Off

Organizations that refuse to use enterprise data:

Fall behind in AI capability

Rely on generic, undifferentiated models

Organizations that ignore privacy:

Risk regulatory fines

Lose customer trust

Expose trade secrets

The winners navigate the middle path:

Controlled learning

Strong isolation

Clear governance

Continuous monitoring

Key Takeaways

The Privacy Paradox has no perfect resolution—but it does have better and worse architectures.

Enterprises that succeed with AI will not be those with:

The most data

The biggest models

But those with:

The clearest boundaries

The strongest controls

The most disciplined approach to learning from data

Training on enterprise data is possible—but only if privacy is designed in, not hoped for.